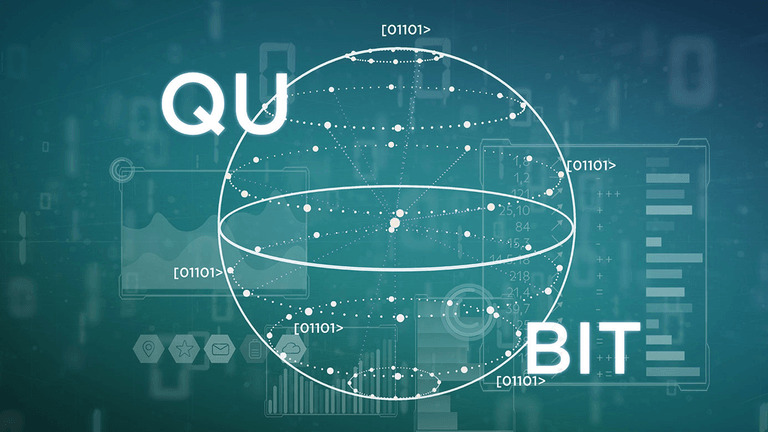

After an exhausting and hectic week of ‘Work from Home’, your mind is busy planning the awesome, relaxing weekend you deserve! With Govt. guidelines echoing in your ears after hearing the same caller tune for the 17th time, you choose to follow them (like you had any choice!😜). Rather than dwelling on the fact, you decide to act. With a popcorn tub in one hand and a remote in the other, you are finally set to watch the new series your friends were talking about. A single click lifts the whole living room into a different zone. The perfectly placed wallpaper on the wall turns Vantablack, and a 4-letter futuristic word, ‘QLED’, appears on the screen with the tagline “Experience the Immersive.”

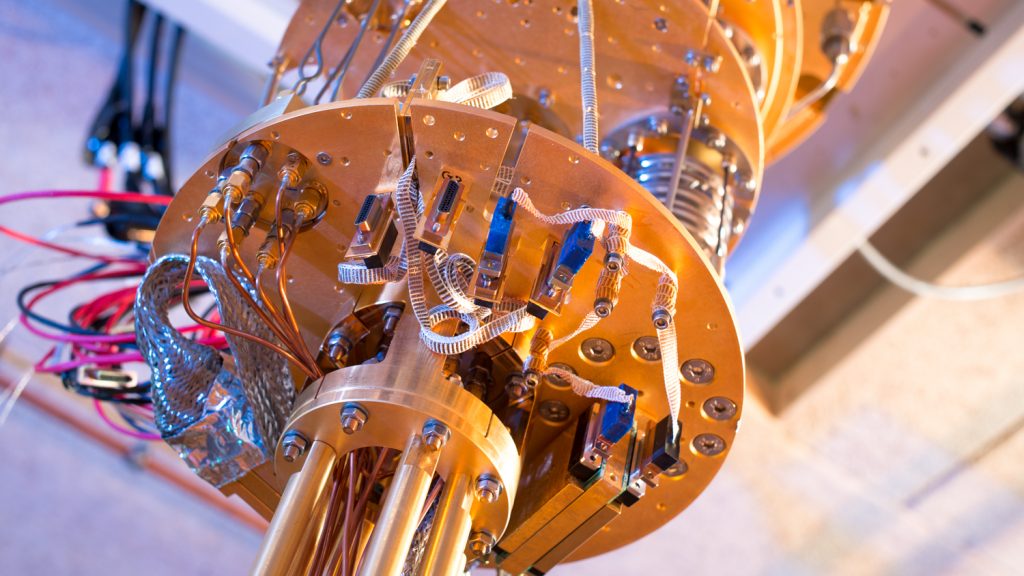

But QLED has already been invented and is on the market, right?!🤨 Well, this is a whole different level of QLED: not some fancy $2000 8K TV, but a marvel straight outta the Stark Industries lab. This is a Quantum LED TV.

Tearing Down An OLED

The screen has become our “Window to the Other World”, or a second set of eyes, as my grandparents used to say (or mock!😞). As simple as the design of this tech seems, it gets more complicated when you try to scale it down or quantize it. QLED has long been sought after as the very foundation of flexible electronics.

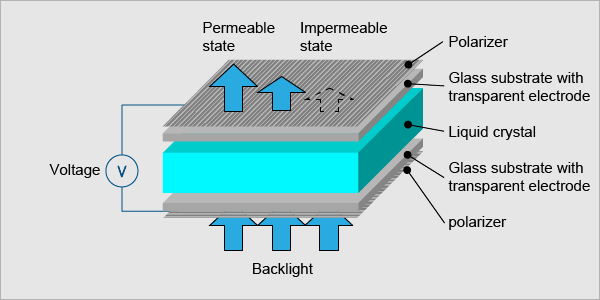

To oversimplify a bit, let’s start with the most widely used panel on the market: the LCD, or Liquid Crystal Display. The name often causes confusion: a liquid crystal is neither a plasma nor an ordinary solution, but a state of matter that flows like a liquid while its molecules stay ordered like those of a crystal. A basic LCD cell is a layer of nematic liquid crystal sandwiched between two transparent electrodes, with a polarizer on either side. The light source is a backlight, generally white light (a mix of red, green, and blue). The light first passes through a polarizer (horizontal), then through the liquid crystal, whose molecules align themselves to twist the light’s polarization by 90°, and finally through the second polarizer (vertical) at the other end.

The main principle of an LCD is ‘light blocking’. When a voltage is applied, the crystals untwist, so the light passes straight through without being rotated and is blocked by the second polarizer, and the pixel appears dark. Red, green, and blue filters sit on top, each letting through only its own color; these are called sub-pixels. Millions of pixels filter and recombine the light to produce the right blend of shades on the screen. These are the very basics of how an LCD works.
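The ‘light blocking’ idea can be sketched in a few lines of Python. This is a toy model, not how a real panel is driven: it assumes ideal crossed polarizers, treats the liquid crystal as a pure polarization rotator, and applies Malus’s law (the transmitted fraction is the squared cosine of the angle between the light’s polarization and the second polarizer). The names `pixel_transmission` and `twist_deg` are made up for illustration.

```python
import math


def pixel_transmission(twist_deg: float) -> float:
    """Fraction of already-polarized light that leaves the second polarizer.

    Assumption: the second polarizer sits at 90 degrees to the first one,
    and the liquid crystal rotates the polarization by `twist_deg`.
    """
    # Angle left between the light's polarization and the second polarizer.
    angle_to_second_polarizer = math.radians(90.0 - twist_deg)
    # Malus's law: transmitted fraction = cos^2(angle).
    return math.cos(angle_to_second_polarizer) ** 2


if __name__ == "__main__":
    # No voltage: full 90 degree twist, the pixel is bright.
    # Full voltage: crystals untwisted (0 degrees), the pixel is dark.
    for twist in (90.0, 45.0, 0.0):
        print(f"twist {twist:5.1f} deg -> transmission {pixel_transmission(twist):.2f}")
```

Intermediate twist angles give intermediate brightness, which is roughly how a sub-pixel can show shades rather than just on and off.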

Now, every tech has its cons, and LCD had some major ones: it wastes energy, it can’t show true deep blacks, and its panels are rigid. The need for a new technology that could replace LCD in the foreseeable future gave birth to OLED, the Organic Light Emitting Diode.

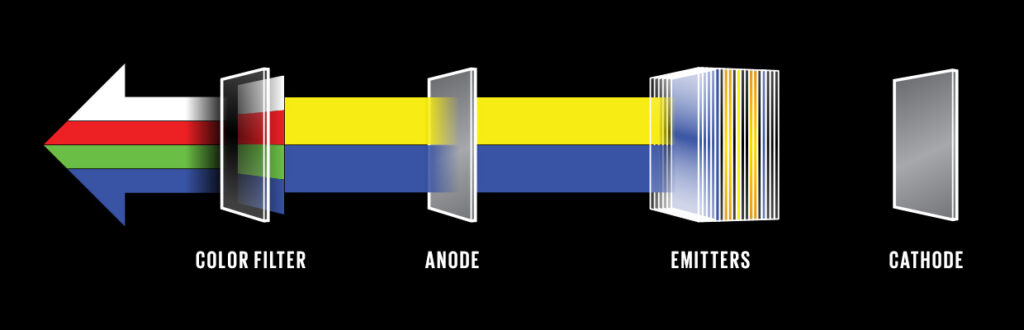

Unlike LCD, OLED does not use a backlight. It is an emissive technology in which the sub-pixels themselves emit red, green, and blue light. The fundamental structure of an OLED has a transparent anode attached to a substrate, with a conductive layer of organic material coated on it. Another organic material forms the emissive layer, and a cathode sits on top. When a voltage is applied, the anode is positive with respect to the cathode, so electrons travel from the cathode towards the anode. The conductive layer gives up electrons to the anode, while the emissive layer receives electrons from the cathode and becomes negatively charged.

With the conductive layer rich in holes (that is, short of electrons) and the emissive layer rich in electrons, the holes, being more mobile, travel towards the emissive layer. The holes and electrons recombine due to electrostatic forces, and light is produced in the emissive region. The light then leaves through the colored sub-pixels, producing a sharp and crisp image on the screen. Adding extra layers can increase the efficiency of an OLED. This tech gives an ultra-thin display, superb black levels, and blazingly fast refresh speeds.

Is this the end of the road? Nah, otherwise why would I be writing this article? OLED also has energy problems, and manufacturing it is pretty expensive. That’s why the path to tackle the problem LED to the fusion of quantum dots and OLED, dubbed ‘QLED’.

The Quantum Dot Future And QLED

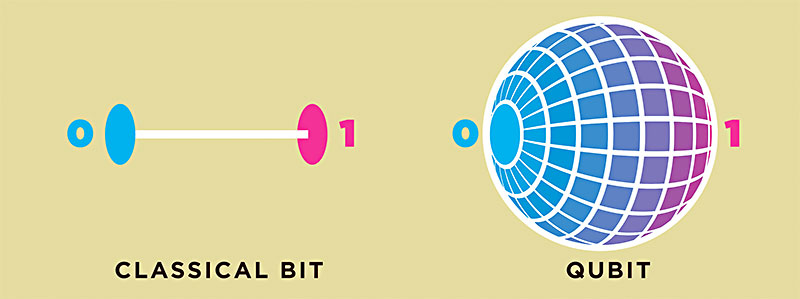

But, what the heck is a quantum dot?!🤔

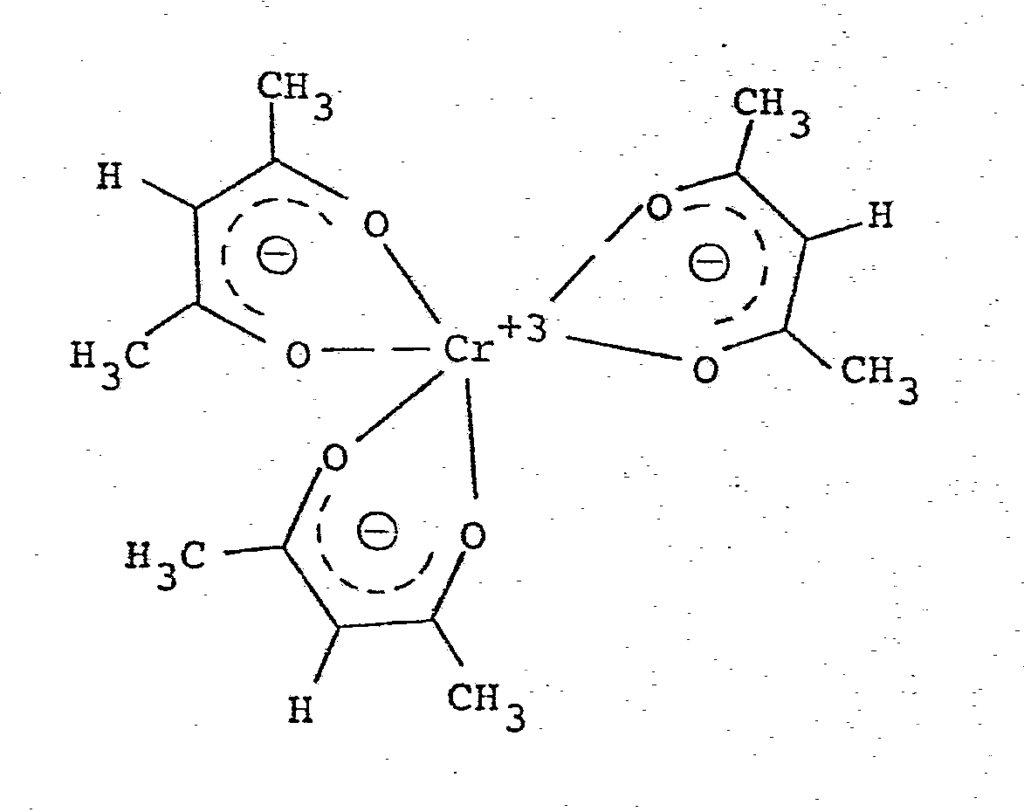

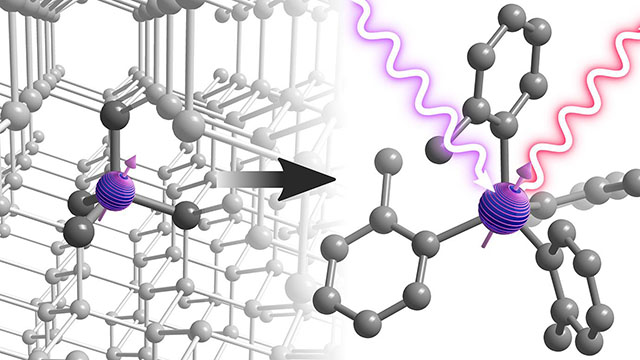

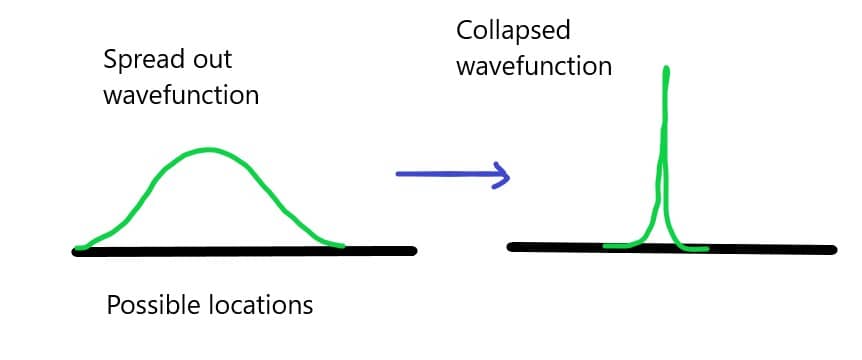

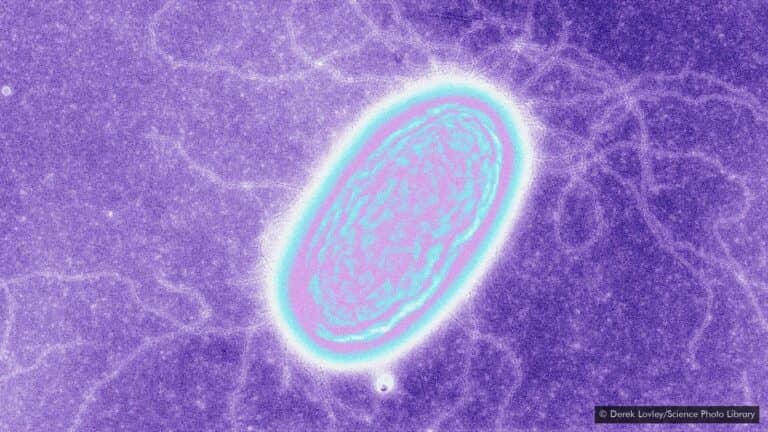

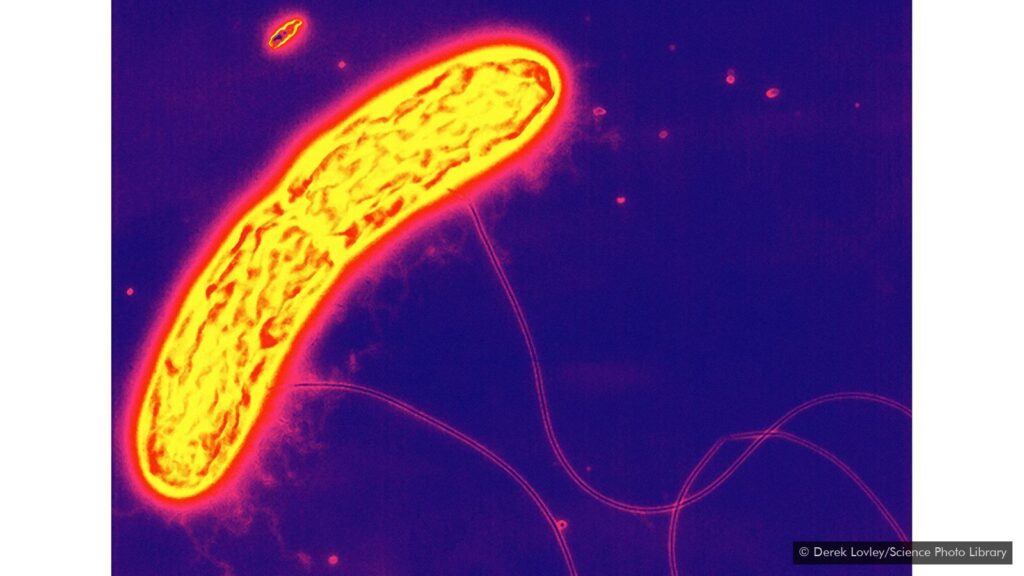

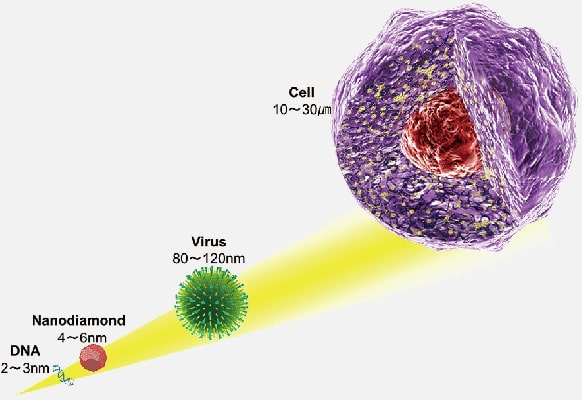

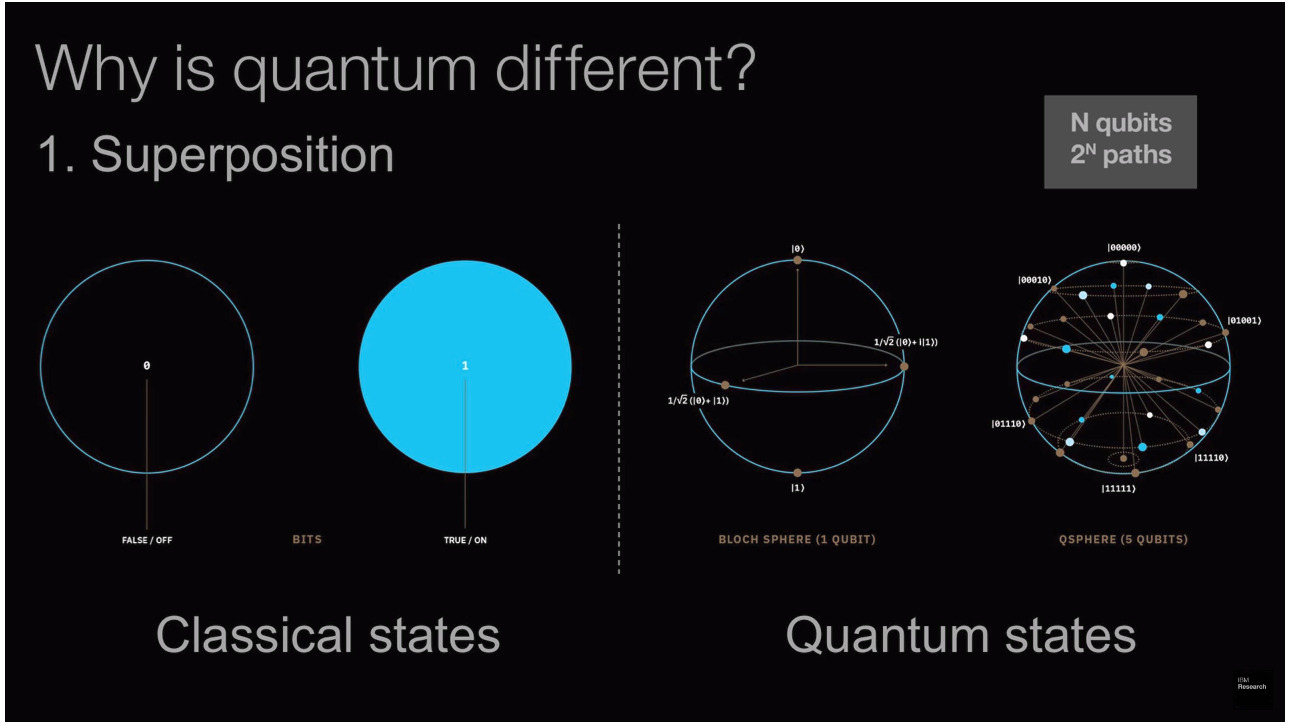

Typically a few nanometres in size, quantum dots are tiny semiconductor crystals made of materials such as zinc selenide or cadmium selenide. Their main property is the ability to convert short wavelengths (blue, 450 to 495 nm) into nearly any other color in the visible spectrum. When a photon hits a quantum dot, an electron-hole pair is created. The pair recombines to emit a new photon whose wavelength depends on the size of the dot. Bigger dots emit longer wavelengths close to red (620-750 nm), while smaller ones emit shorter wavelengths closer to the violet end (380-450 nm). This tunability offers wide scope for research and for applications in various fields.
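To get a feel for the size-to-color link, here is a minimal Python sketch based on the Brus equation, a common effective-mass approximation that the article itself does not use. The cadmium selenide numbers below (band gap, effective masses, dielectric constant) are approximate textbook-style values chosen for illustration, and the function name `emission_wavelength_nm` is hypothetical. Treat the output as a rough trend, not a prediction for any real dot.

```python
import math

# Physical constants (SI units).
HBAR = 1.054571817e-34      # reduced Planck constant, J*s
PLANCK = 6.62607015e-34     # Planck constant, J*s
LIGHT_SPEED = 2.99792458e8  # speed of light, m/s
M0 = 9.1093837015e-31       # free electron mass, kg
E_CHARGE = 1.602176634e-19  # elementary charge, C
EPS0 = 8.8541878128e-12     # vacuum permittivity, F/m

# ASSUMED, approximate CdSe parameters (illustrative only).
BAND_GAP_EV = 1.74          # bulk band gap, eV
M_ELECTRON = 0.13 * M0      # effective electron mass
M_HOLE = 0.45 * M0          # effective hole mass
EPS_R = 10.6                # relative dielectric constant


def emission_wavelength_nm(radius_m: float) -> float:
    """Estimate the emission wavelength (nm) of a dot of the given radius (m)."""
    # Quantum confinement raises the energy as the dot shrinks (~1/R^2).
    confinement = (HBAR**2 * math.pi**2) / (2 * radius_m**2) * (
        1 / M_ELECTRON + 1 / M_HOLE
    )
    # Electron-hole Coulomb attraction lowers it slightly (~1/R).
    coulomb = 1.8 * E_CHARGE**2 / (4 * math.pi * EPS0 * EPS_R * radius_m)
    energy_j = BAND_GAP_EV * E_CHARGE + confinement - coulomb
    # Photon wavelength from its energy: lambda = h * c / E.
    return PLANCK * LIGHT_SPEED / energy_j * 1e9


if __name__ == "__main__":
    for radius_nm in (2.0, 2.5, 3.0):
        wavelength = emission_wavelength_nm(radius_nm * 1e-9)
        print(f"radius {radius_nm:.1f} nm -> about {wavelength:.0f} nm")
```

Under these assumptions the estimated wavelength grows with the radius, matching the rule of thumb above: bigger dots sit nearer the red end, smaller dots nearer the blue and violet end.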

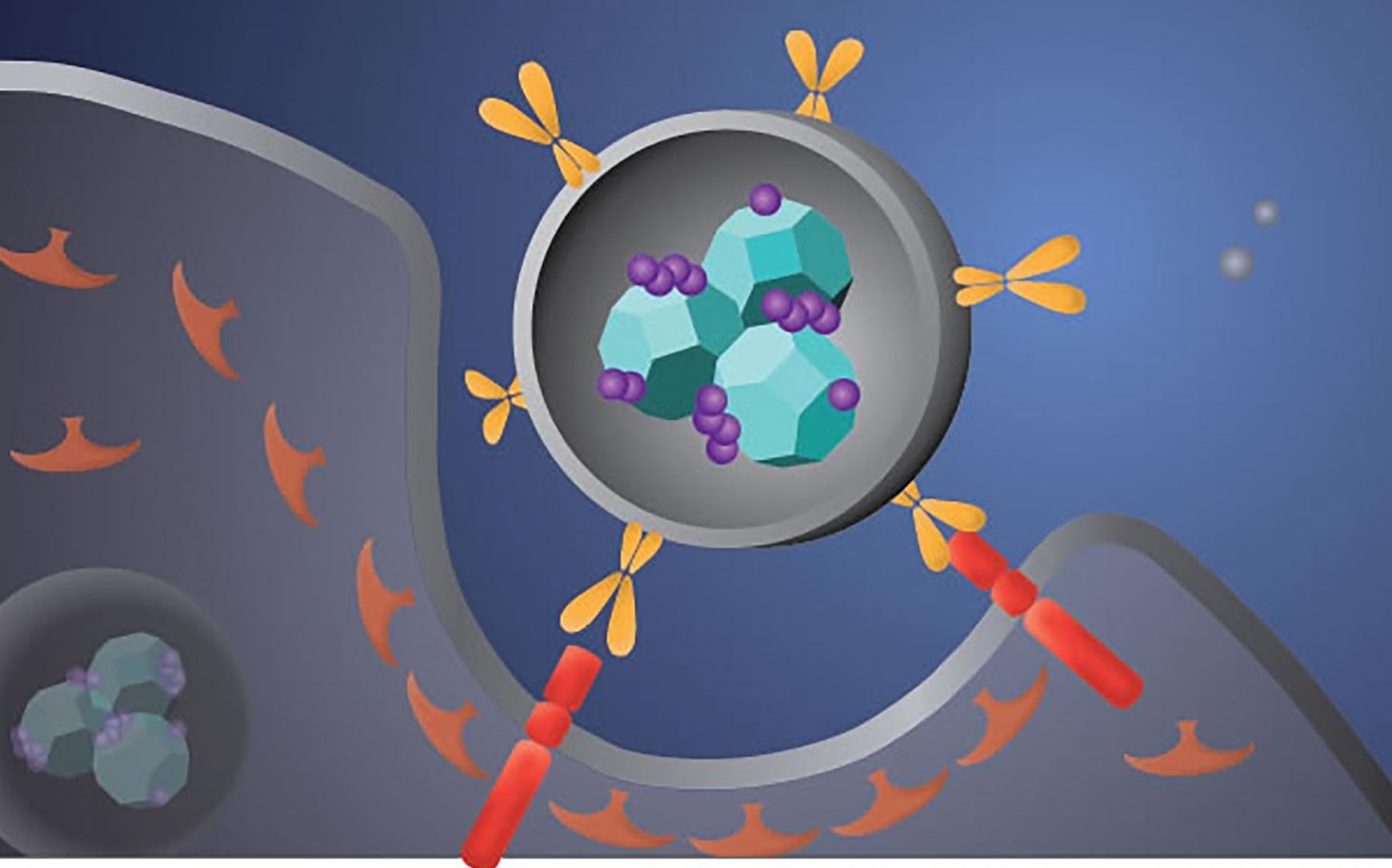

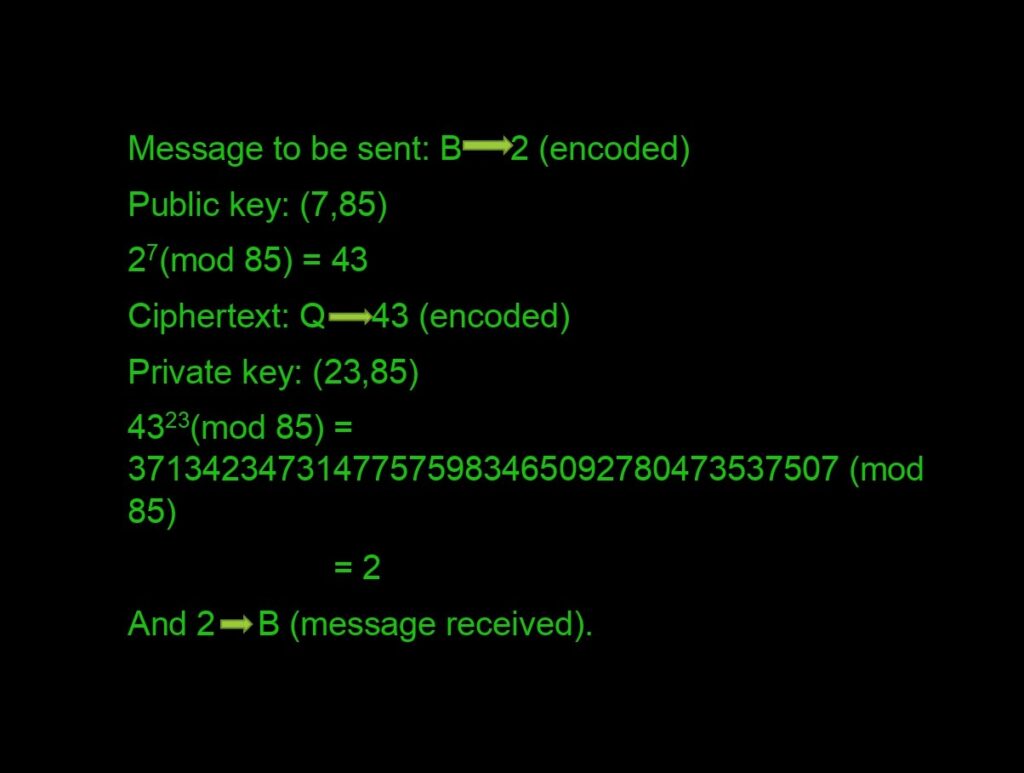

What do these dots have to do with the picture on your screen? Today, quantum dots are used to increase the efficiency of LCDs two-fold. Introducing dots into both LCD and OLED inspired two new enhanced panels: PE-QD TV and EE-QLED (or QD-LED).

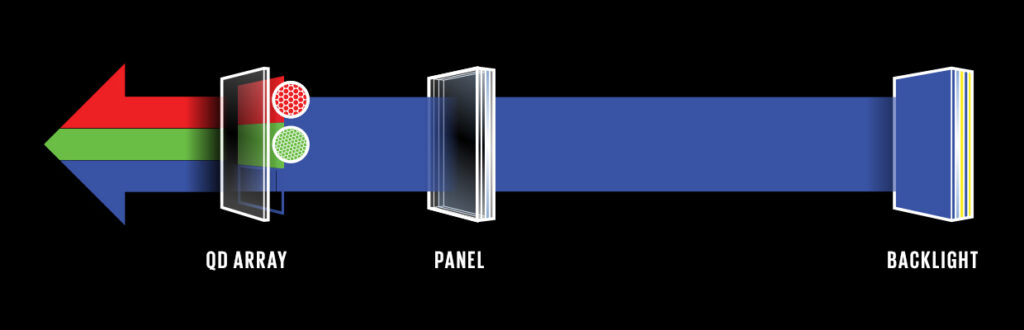

The major difference between the two is the source of light. In a photo-emissive QD display, a blue LED backlight is used with red and green quantum dots. The blue sub-pixels are left transparent, while the red and green dots convert blue photons into their respective colors. Because the light is already pure, it requires no filtering, which raises the efficiency of the display to 99%. Since quantum dots are produced in a solution, they can be printed cheaply and precisely using inkjet and transfer printing. Finally, manufacturing tough flexible electronics can be cost-effective and energy-saving as well as streamlined. (read more about it here)

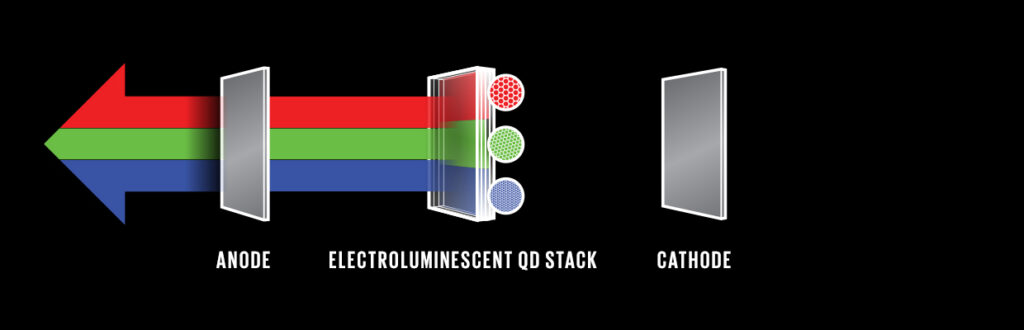

Photo-emissive QD TV is just an interim step for the display industry. The real quantum leap is the electro-emissive QLED, or QD-LED, TV. Here the quantum dots are excited by electrons rather than by photons from a backlight. These dots can produce the exact shade of color with virtually no loss of light. Since there is no backlight, there is no light leakage, so the screen can simulate Vantablack darkness. These QLED screens use less energy, cost less, and are brighter than OLED, with wide viewing angles too! Considering that these panels are easy to fabricate and use inorganic materials, QLEDs also enjoy longer lifetimes than OLEDs.

Samsung has been racing to produce the first-ever commercial QLED TV, but it’s still in the early development phase, so we can’t expect to see one in the market just yet. But with the global pandemic shutting us in our homes, lying on a comfy sofa, TV became an integral element that kept us (or just me!) going through this lockdown. Let’s hope they develop the QLED TV at a fast-track pace so that we can enjoy another movie thanks to quantum dots!

What is now Proved was once only Imagined!

William Blake