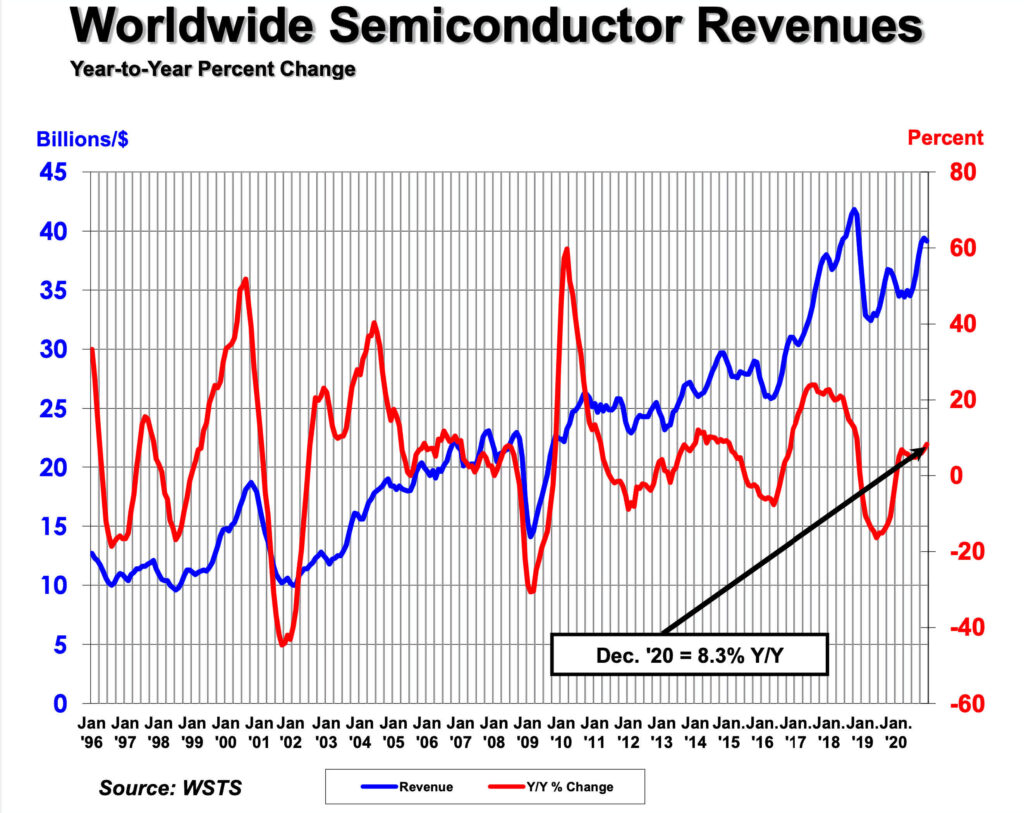

Ever since the Gen-Z era began, semiconductors have been advancing on every front. AI, automobiles, robotics and nearly every other modern technology depend on semiconductors. And because silicon is at the heart of every semiconductor chip, demand for it has surged across the globe. With a limited number of manufacturers and reduced production during the coronavirus pandemic, this high demand resulted in a severe chip shortage.

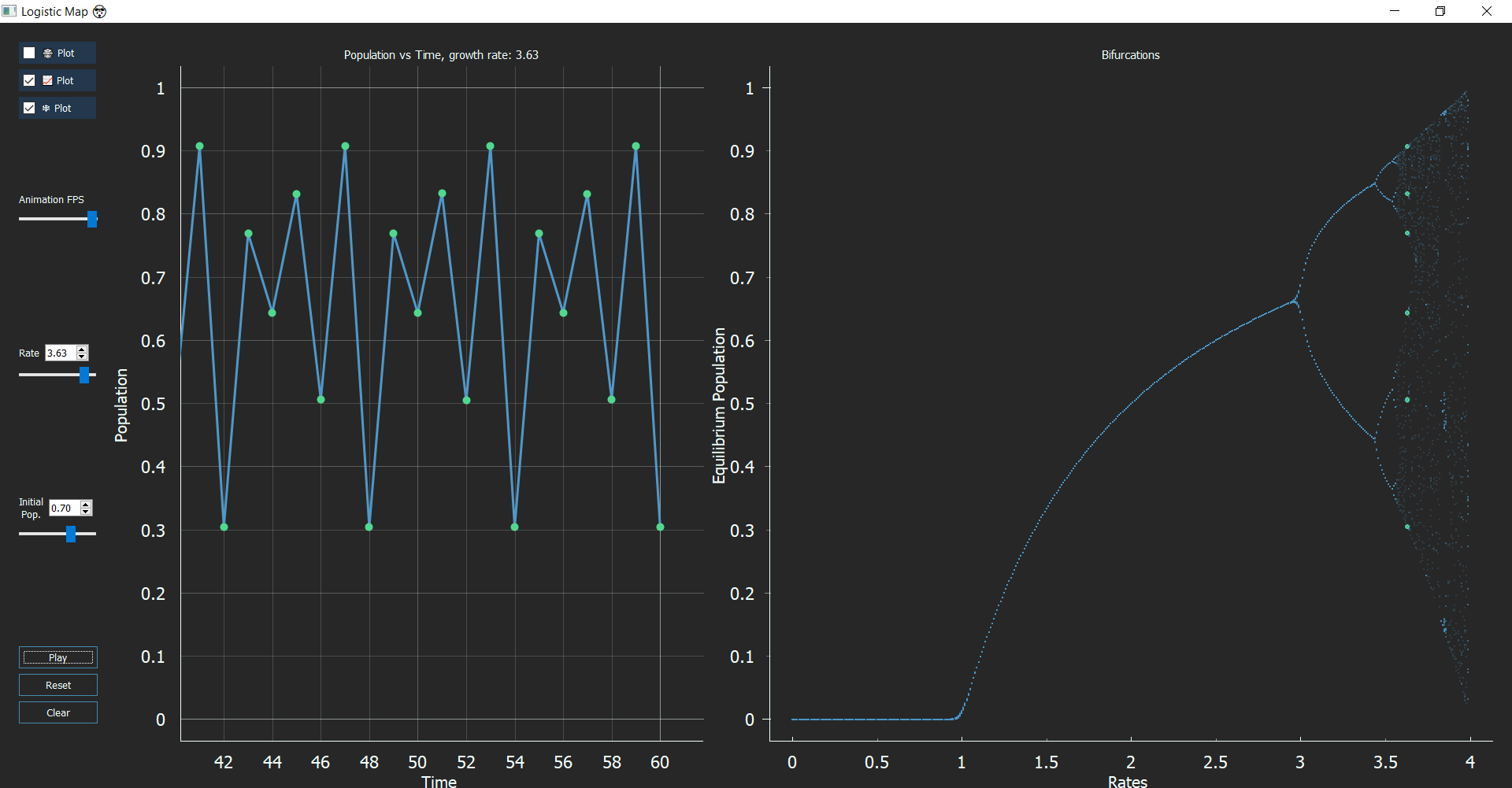

But here is the interesting part. Global semiconductor sales rose 6.5% from 2019 to nearly $439 billion in 2020. So what exactly caused this demand-supply mismatch? What is the US planning to do about it, and how could that affect the entire semiconductor industry? Is China playing a role in this situation?

Before answering these questions, let me cover a few segments that help us understand the international politics and economics involved.

The Corona Impact: It is not what it is…

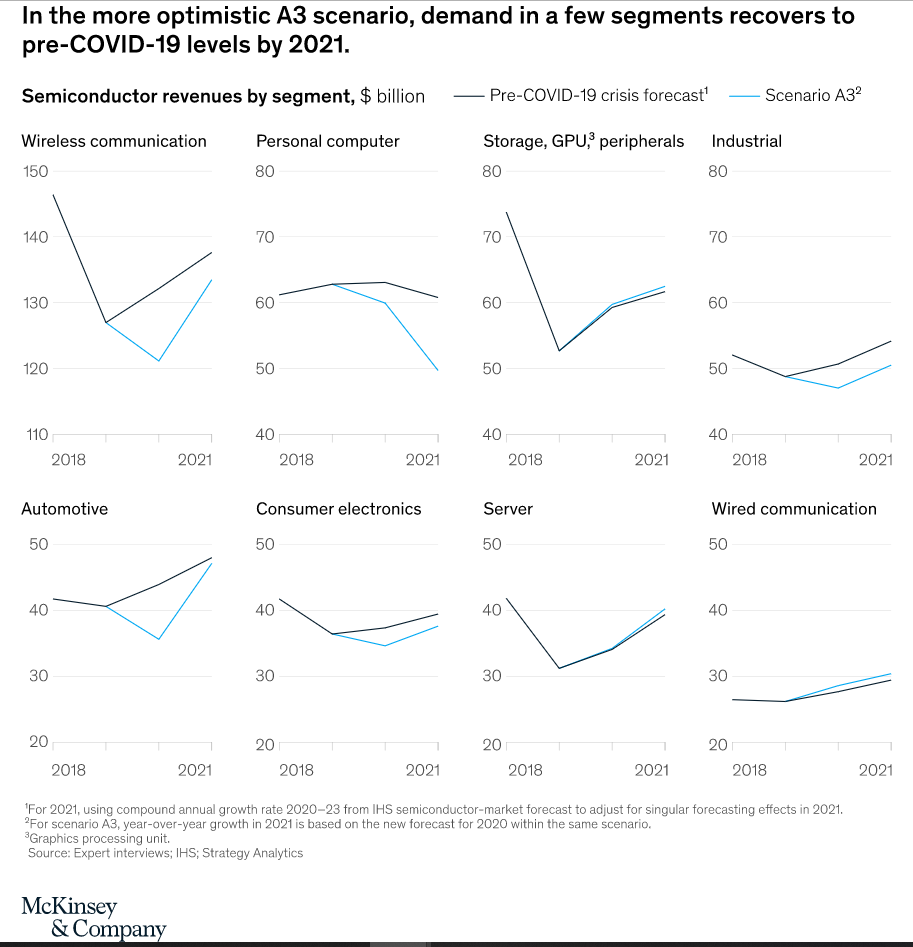

Several scenarios emerged in the industry after COVID-19. The three major ones are:

PCs and Laptops: This segment would see a steep decline in demand, and the performance gap will widen over time. You might think that because all of us need PCs for remote work, demand should keep rising along with sales. But if most people buy their electronics in 2020, demand drops steeply for the next five years.

Similarly, the automotive industry relies heavily on government policies and incentives. And that won’t be more than 1-5% as of 2021, which creates a scenario of weaker demand. Lastly, there is the wired communications sector, which may or may not see a fall in demand, depending on whether home-schooling and remote work grow or decline.

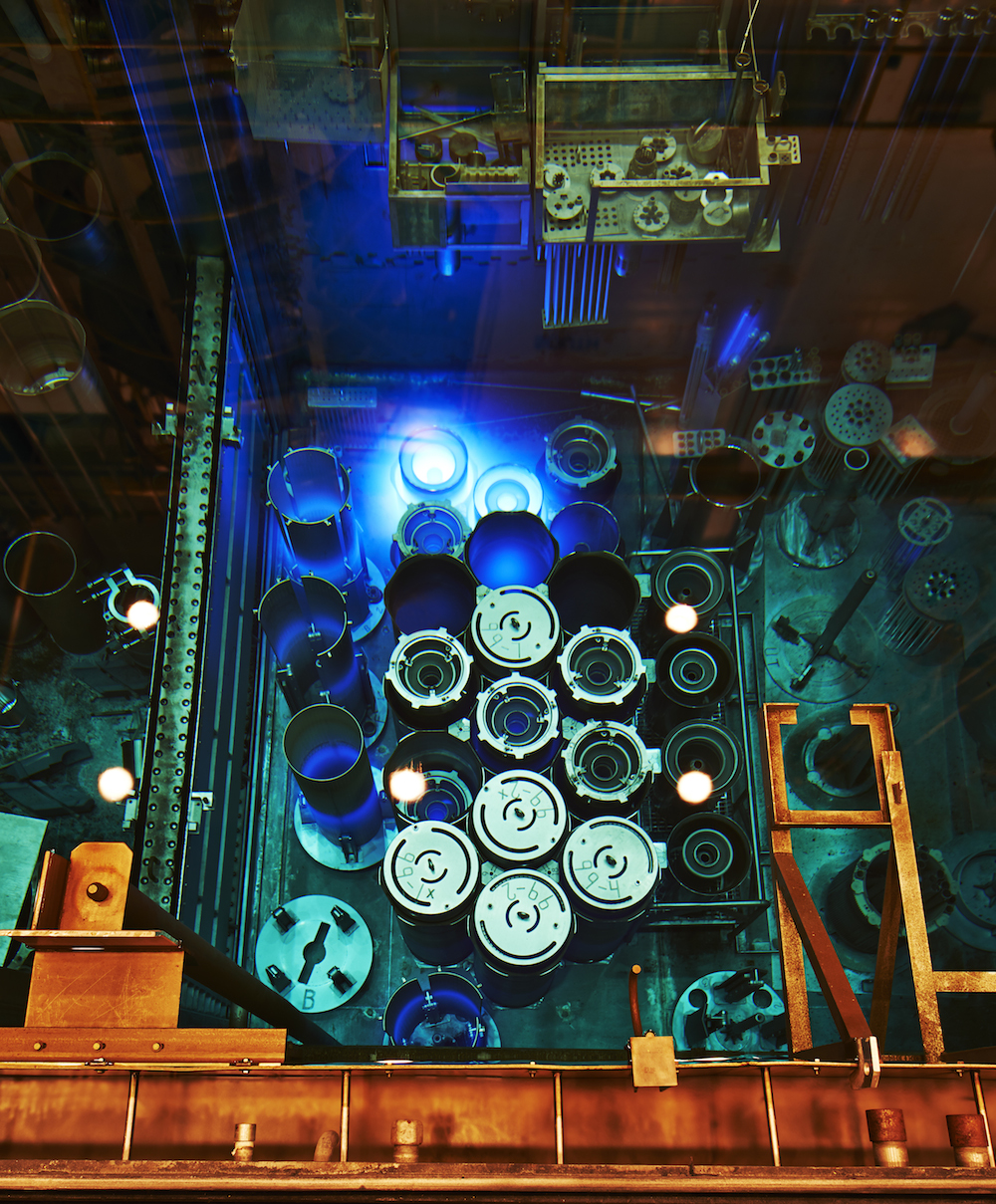

Simultaneously, the companies that produced automotive chips sat idle during the pandemic, and the global chip shortage resulted in 1.28 million fewer vehicles being manufactured. So the growth in the semiconductor market seen in the figures above came largely from the PC and laptop industry. Intel alone contributed nearly $70 billion in 2020 through chip supply. Other major contributions came from Qualcomm, NVIDIA and Samsung.

Disruption of Automotive Industry

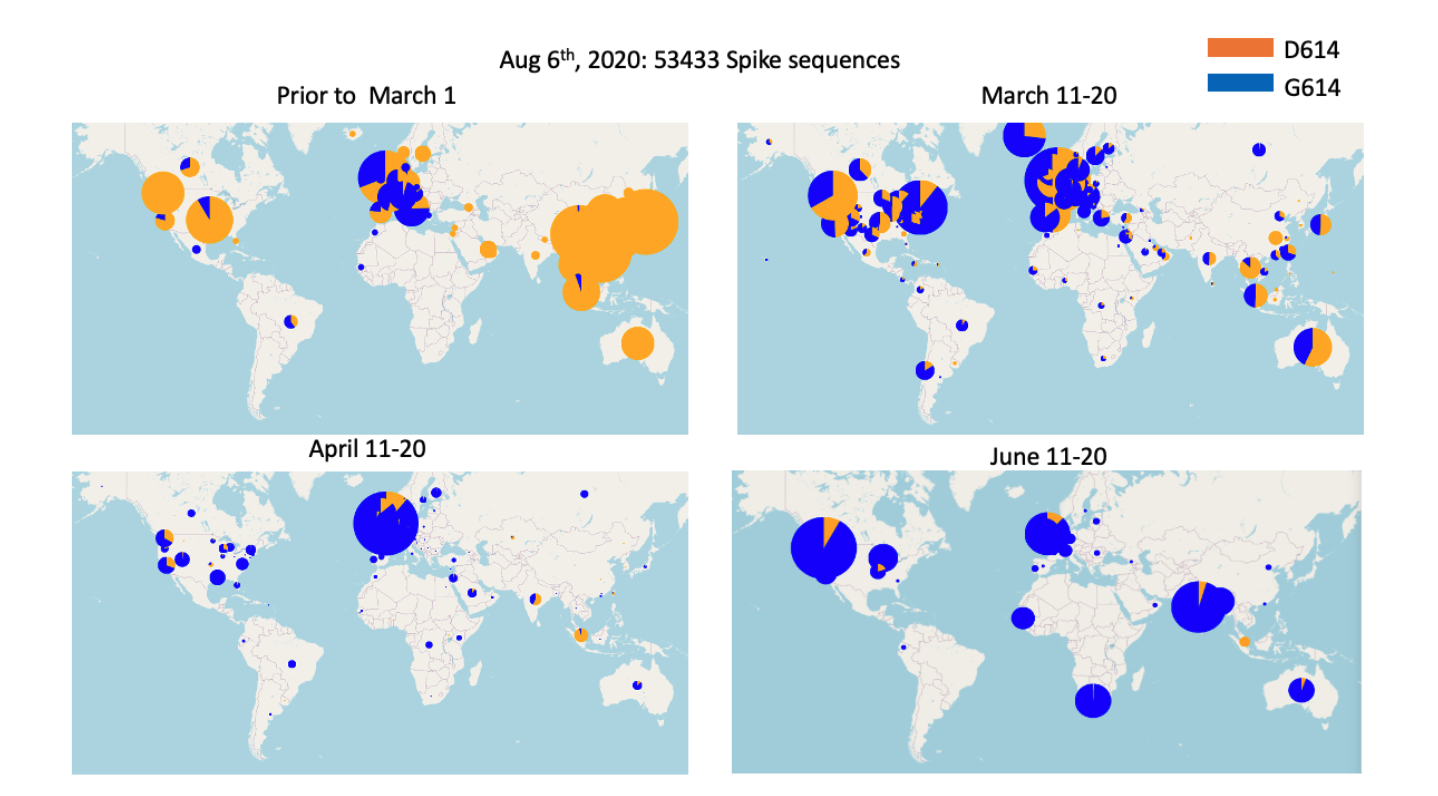

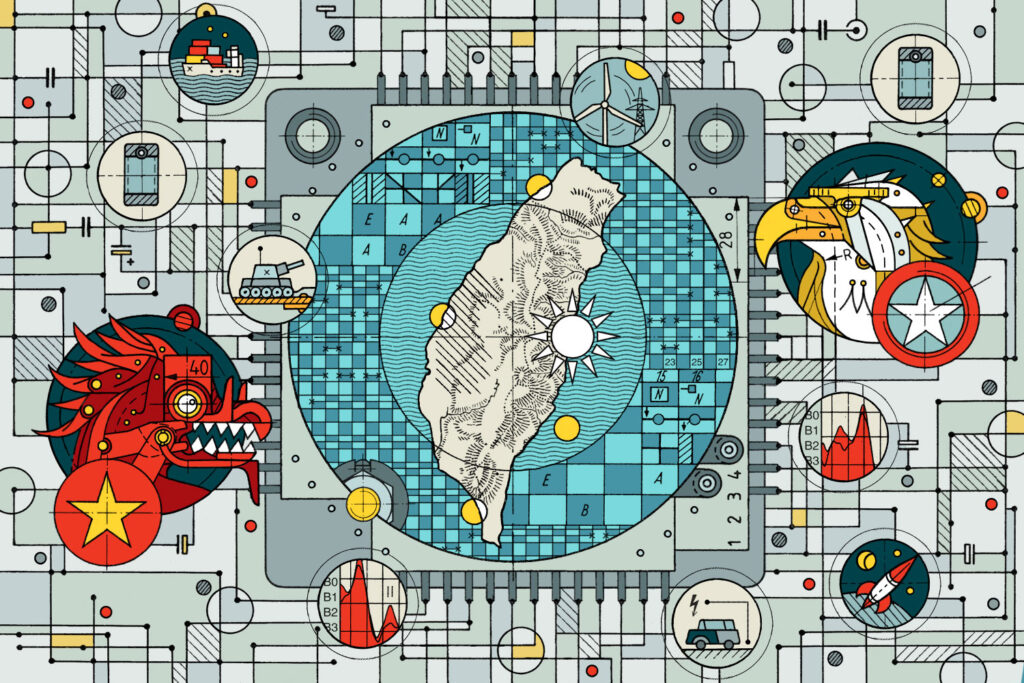

A lack of R&D and insufficient production resources led to the disruption of the automobile industry. Even the US felt drastic effects, and its biggest competitor, China, became the semiconductor superpower at one point. Economically, China was hurting US income: as China grew stronger in the semiconductor market, the US was generating $83 billion less in income.

In its 14th five-year plan, China explicitly outlined the goal of achieving “complete self-sufficiency” in semiconductors, backed by state investments and discriminatory industrial policies. The US, meanwhile, was angry at being hit by an 18-percentage-point drop in global market share, which eroded its long-standing global leadership position. The situation was intense, politics was heating up, and the US needed to take action.

The Semiconductor Legislation and Biden Government

“We’re working on that. (Senate Majority Leader) Chuck Schumer and, I think, (Senate Republican Leader) Mitch McConnell are about to introduce a bill along those lines,” Biden said during remarks about his own plan to boost the nation’s infrastructure. The President then held a meeting with CEOs of top companies, including General Motors Co and Ford Motor Co, along with White House officials Brian Deese and Jake Sullivan. The meeting was held virtually, with an agenda covering the infrastructure bill and the semiconductor shortage.

Addressing Google/Alphabet, GM and Intel, Biden said that the US must build its own infrastructure to prevent future supply crises. The White House said in a readout of the meeting that participants had “discussed the importance of encouraging additional semiconductor manufacturing capacity in the United States to make sure we never again face shortages.”

The event came on the heels of Biden’s February 24 executive order calling for a 100-day review across federal agencies of semiconductors and three other key items: pharmaceuticals, critical minerals and large-capacity batteries. Policymakers are focused on building additional semiconductor capacity in the United States, but experts say there are limited remedies in the near term. Biden also pointed to the semiconductor shortage as he sought to build a case for a $2 trillion infrastructure package.

Fighting China: Its International Politics

As Biden introduced these packages, the ‘China Challenge’ was also raised. It focuses on leveraging smart, multilateral and well-tailored policies that can help the US compete even more strongly on the global stage. Incentivizing manufacturing of advanced semiconductors in the U.S. remains a key pillar of any such strategy. “I’ve been saying for some time now, China and the rest of the world is not waiting. And there’s no reason why Americans should wait,” the president emphasized.

Prior to this meeting, the White House had already put forward a $50 billion infrastructure plan for a new Commerce Department office supporting production of critical goods. Alongside this, it backed congressional legislation to invest another $50 billion in semiconductor research and manufacturing.

Semiconductor War: Impact on India

India has always been on the sidelines in such global political matters, especially when technology giants are involved. This affects India both economically and intellectually. Prices of electronic products always rise after such global conflicts. Trade becomes harder, imports of goods increase and the economy is affected in one way or another. Because India lacks R&D in the semiconductor market, skilled and experienced professionals tend to leave the country for better opportunities. All of this, in turn, leaves a country like India decades behind the techno-superpowers of the world.

Still, the big question remains: if this can affect semi-powerful countries like India in this way, what can it do to countries with even smaller GDPs? The weapons war is over. A biological war is predicted. And the techno-war is being fought. Think!
